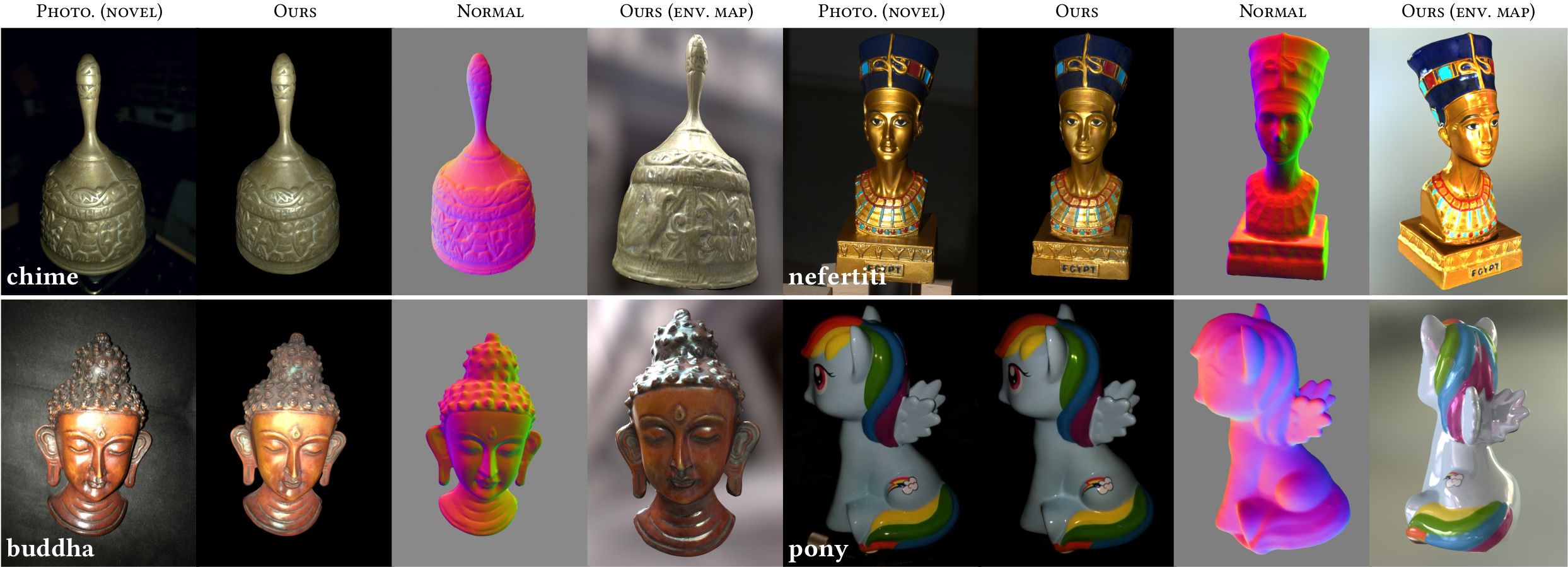

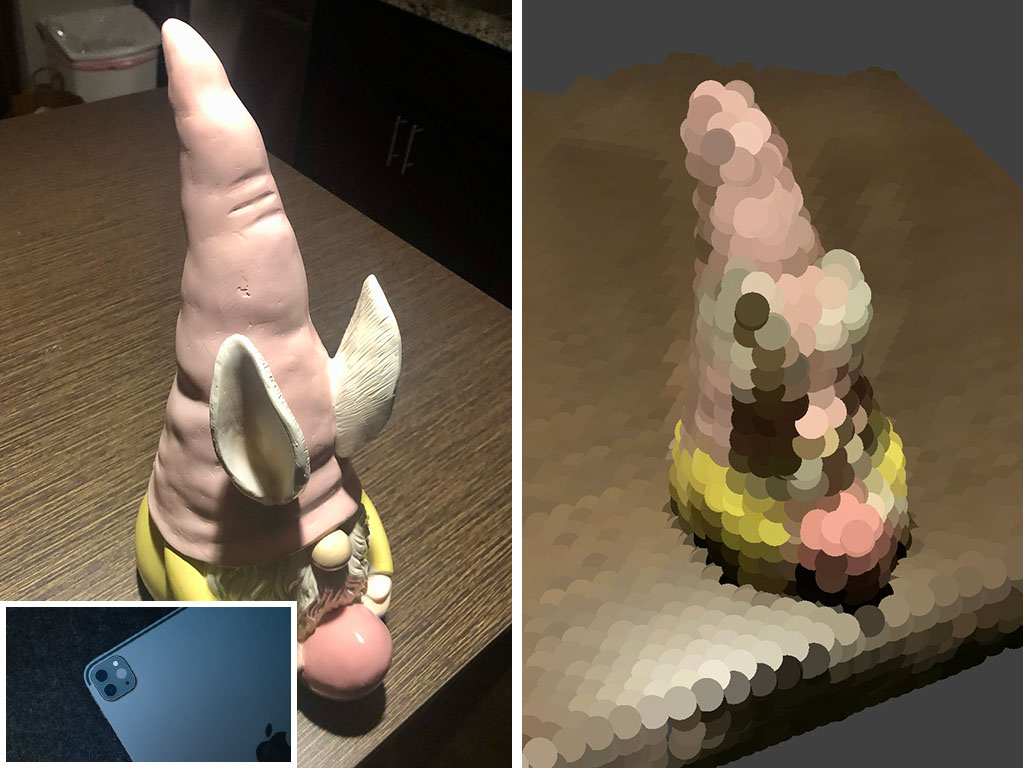

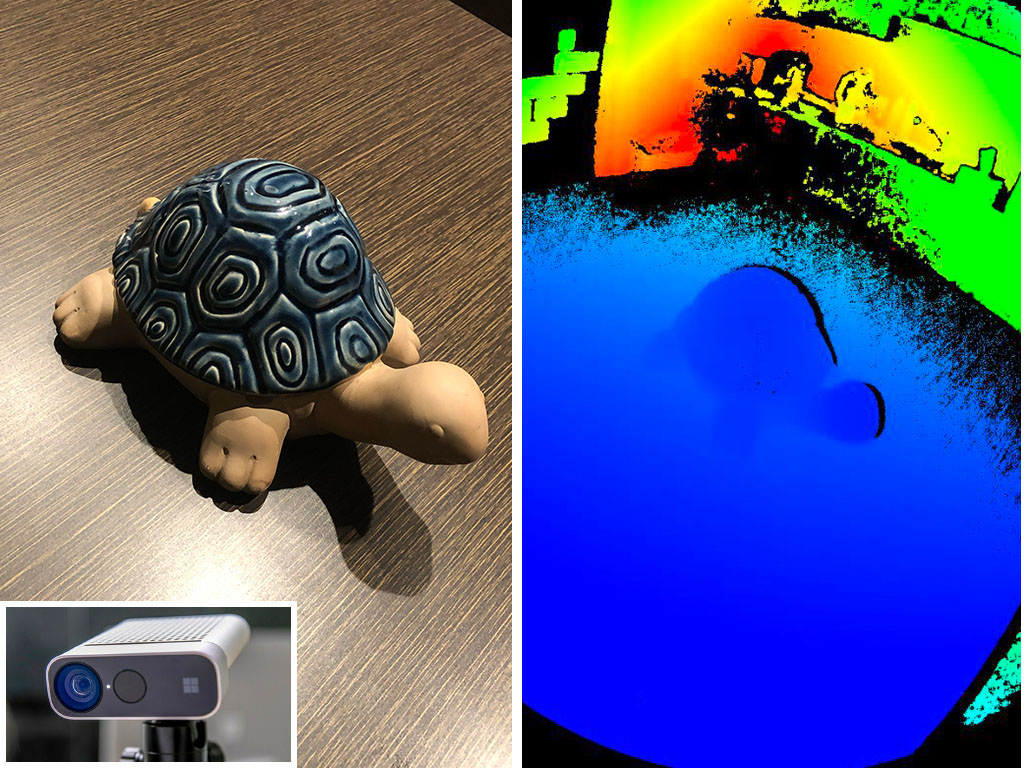

Our approach takes multi-view photos of an object as input and uses Monte Carlo differentiable rendering to jointly reconstruct a triangle mesh and a set of PBR textures. These assets can be reused directly in traditional computer graphics pipelines, such as a path tracer or an AR/VR environment.

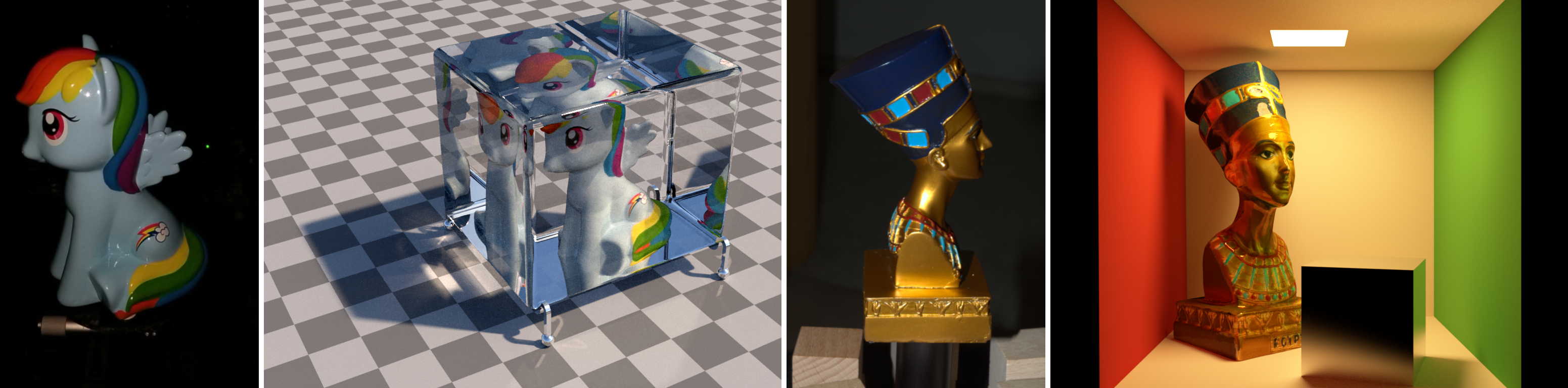

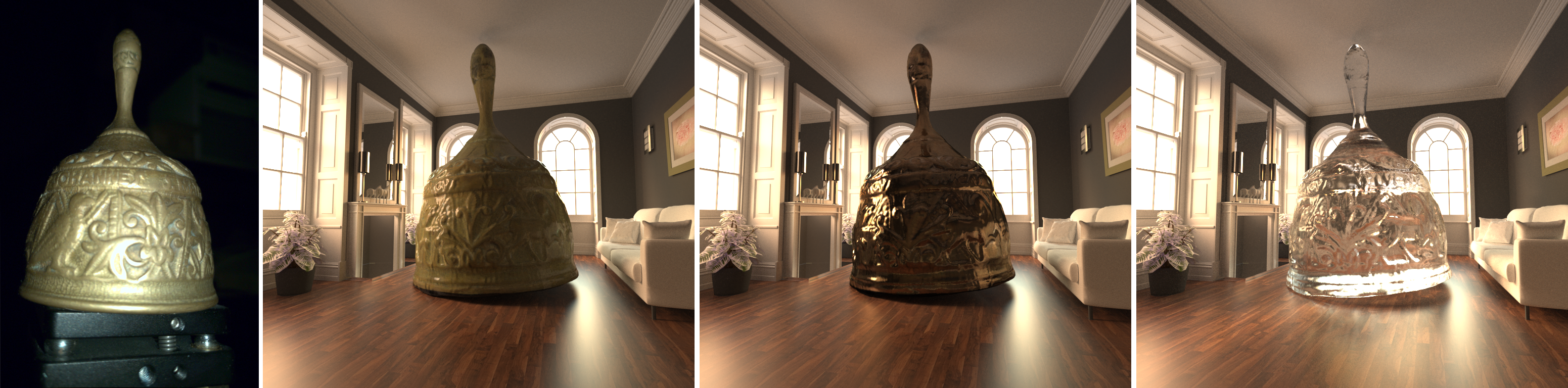

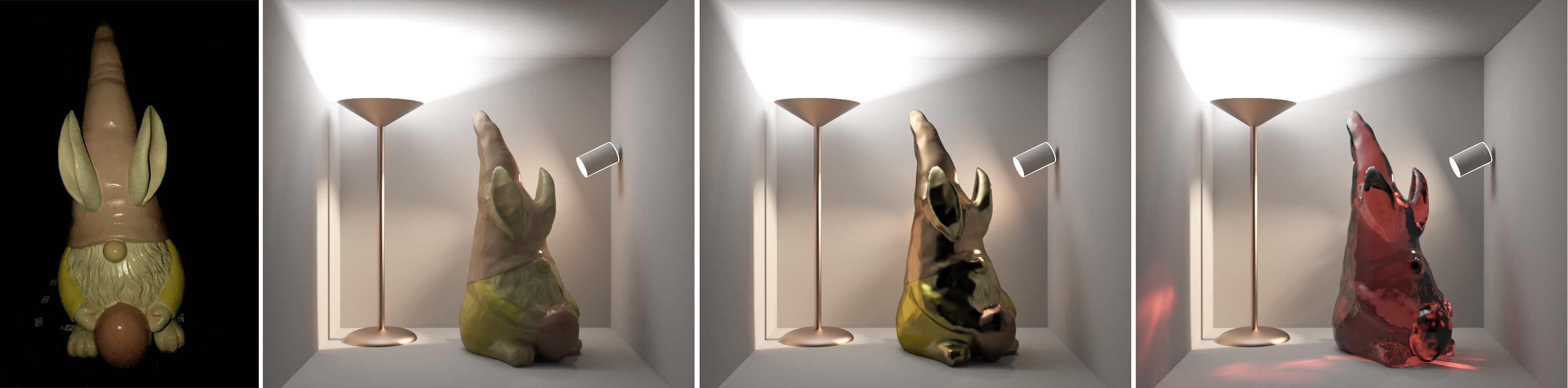

Our reconstructed 3D models rendered in Blender (Cycles renderer).